Hot data is necessary for live security monitoring.

Archive data (cold data) cannot be accessed quickly. In most SIEM solutions, it takes days to make archive data live again if the archived time frame is more than 30 days.

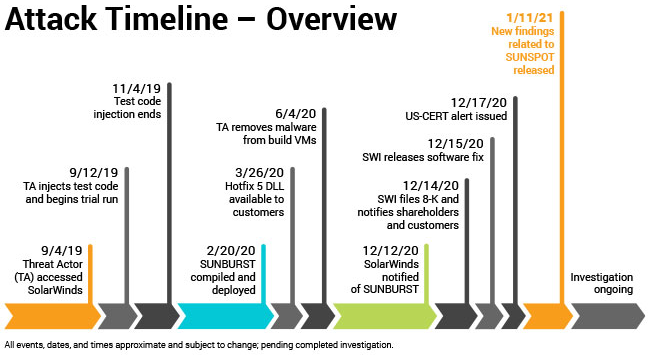

As an example, SolarWinds said the attackers first compromised its development environment on Sept. 4, 2019. So, to investigate the SolarWinds case today (July 13, 2021), we have to go back to Sept. 4, 2019. In this case, we need about 22 months of live data.
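
The lookback window for this case can be checked with simple date arithmetic. The following is a minimal sketch using only the two dates given above; the helper name `months_between` is made up for illustration.

```python
from datetime import date

def months_between(start: date, end: date) -> int:
    """Whole calendar months elapsed from start to end (hypothetical helper)."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    # If the day-of-month has not been reached yet, the last month is incomplete.
    if end.day < start.day:
        months -= 1
    return months

compromise = date(2019, 9, 4)       # first compromise reported by SolarWinds
investigation = date(2021, 7, 13)   # date of the investigation in this post

lookback_days = (investigation - compromise).days
lookback_months = months_between(compromise, investigation)

print(f"Lookback needed: {lookback_days} days (~{lookback_months} months)")
```

Any hot-data window shorter than this lookback would force the investigation to pull data back from the archive.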

The second example of why hot data is critical comes from IBM's data breach report. According to this report, the average time to identify and contain a breach is 280 days.

Hot data gives defenders the quick access they need for real-time threat hunting, but hot data is more expensive than the archive option in current SIEM solutions.

Keeping data hot for SIEM use is inevitably one of the most expensive data storage options.

What are your thoughts about it, dear professionals?

We changed our model to be able to cover such critical long-term cases.

We upload all our critical log sources to AWS S3 for a 3-year retention period. Based on compliance needs, we either leave the log files as-is or scrub them of metadata that does not serve any purpose.

In a second pass, we then ingest the last 180 days of data into our SIEM. Should the need arise, we can always search our original log files for the required data or re-ingest older data.
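
To illustrate the two-tier model described above, here is a rough sketch that decides where a log from a given date would live. The function name and tier labels are hypothetical, and the 3-year retention is approximated as 1095 days; this is not our actual tooling.

```python
from datetime import date

HOT_DAYS = 180            # window ingested into the SIEM (from the description above)
ARCHIVE_DAYS = 3 * 365    # 3-year S3 retention, approximated as 1095 days (assumption)

def storage_tier(log_date: date, today: date) -> str:
    """Return where a log of the given date is expected to be (hypothetical labels)."""
    age = (today - log_date).days
    if age < 0:
        raise ValueError("log_date is in the future")
    if age <= HOT_DAYS:
        return "siem"      # searchable live in the SIEM
    if age <= ARCHIVE_DAYS:
        return "s3"        # search the original files or re-ingest
    return "expired"       # past the retention period

today = date(2021, 7, 13)
print(storage_tier(date(2021, 6, 1), today))   # recent log
print(storage_tier(date(2019, 9, 4), today))   # SolarWinds first-compromise date
```

With these numbers, a log from Sept. 4, 2019 would no longer be hot in the SIEM but would still be within the S3 retention period, so it could be searched in place or re-ingested.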

This helps us save money while addressing security needs.

@reviewer1469436 Some SIEMs keep log data hot for a long time with a minimal disk footprint. For example, for 10,000 EPS and 365 days of live (hot) data, they require 20 TB of disk. This model may be easier than yours, and it is very fast.
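
As a back-of-the-envelope check of that sizing, the sketch below works out how many events 10,000 EPS produces in a year and how many bytes per event a 20 TB disk allows. It assumes a constant event rate and decimal terabytes (10^12 bytes); the function names are made up for illustration.

```python
SECONDS_PER_DAY = 86_400

def total_events(eps: int, days: int) -> int:
    """Events produced at a constant rate of `eps` over `days` days."""
    return eps * SECONDS_PER_DAY * days

def bytes_per_event(disk_bytes: int, eps: int, days: int) -> float:
    """Average on-disk budget per event for the given disk size."""
    return disk_bytes / total_events(eps, days)

events = total_events(10_000, 365)
budget = bytes_per_event(20 * 10**12, 10_000, 365)  # 20 TB, decimal (assumption)

print(f"{events:,} events, about {budget:.1f} bytes per event")
```

Under these assumptions the budget comes out at roughly 63 bytes per stored event, which suggests the figure relies on strong compression or reduced fields rather than storing raw log lines as-is.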