What is our primary use case?

I use Terraform, an infrastructure-as-code tool, to create Kubernetes clusters. I specify the machine type and memory requirements in my Terraform configuration, and Terraform sets up the network. With Google Kubernetes Engine (GKE), Google manages the Kubernetes control plane, so I only need to focus on creating and managing nodes. Currently, I'm creating three-node Kubernetes clusters, including private clusters for security. Workloads can be deployed to GKE using YAML files or the Kubernetes CLI. To expose deployments to end users, I create load balancers. I use cluster autoscaling and Horizontal Pod Autoscaler (HPA) autoscaling to automatically keep my workloads at the desired size. GKE also provides various options for load balancing, including Ingress. Kubernetes handles credentials using Secret resources, and configuration is done using ConfigMaps. The main workflow is to create deployments, pods, services, secrets, and config maps.
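As a rough illustration of the "create deployments and services" workflow, here is a minimal sketch that builds a Deployment and a LoadBalancer Service as plain Python dictionaries and prints them as JSON, which the Kubernetes CLI accepts alongside YAML. The application name `web`, the image `nginx:1.27`, the replica count, and the port are placeholders chosen for the example, not values from my setup.

```python
import json


def deployment(name, image, replicas, port):
    """Build a Deployment manifest; the same labels tie selector and pods together."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


def load_balancer_service(name, port, target_port):
    """Build a Service of type LoadBalancer that routes to pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


# Placeholder values for illustration only.
manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        deployment("web", "nginx:1.27", 3, 80),
        load_balancer_service("web", 80, 80),
    ],
}

print(json.dumps(manifests, indent=2))
```

Saved to a file, output like this could be passed to `kubectl apply -f`, in the same way as a YAML file.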

What is most valuable?

Workloads are managed automatically, and there's a cluster autoscaling option in Google Kubernetes Engine. It also supports Horizontal Pod Autoscaler (HPA) autoscaling, which keeps the number of pods at the desired size. You can create a load balancer for different types of service access using Ingress. Kubernetes handles credentials with Secret resources, and configuration is done through ConfigMaps.

So, autoscaling is the most valuable feature.
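To show roughly how pod autoscaling arrives at a "desired size", here is a small sketch of the core calculation the Kubernetes documentation gives for the Horizontal Pod Autoscaler: the desired replica count is the current count scaled by the ratio of the observed metric to the target metric, rounded up. The function name and the example numbers are my own, and the real controller applies further rules (pod readiness, stabilization windows) that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified version of the HPA scaling rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to the [min_replicas, max_replicas] range.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical numbers: average CPU utilization in percent, target of 60%.
print(desired_replicas(3, 90, 60, min_replicas=2, max_replicas=10))   # scale up
print(desired_replicas(4, 30, 60, min_replicas=2, max_replicas=10))   # scale down
print(desired_replicas(3, 63, 60, min_replicas=2, max_replicas=10))   # within tolerance
print(desired_replicas(8, 120, 60, min_replicas=2, max_replicas=10))  # capped at max
```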

What needs improvement?

I would like to see better support for multiple node pool configurations. In a cluster, we can create multiple node pools, which means multiple machine configurations. This is better because if we have a job that requires high CPU, we can have a node pool available for that job with a high-CPU machine type.

And if we have a job that requires high memory, we can have a node pool available for that job with a high-memory machine type.
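One way to picture matching jobs to machine configurations: GKE labels each node with `cloud.google.com/gke-nodepool`, so a workload can ask for a particular node pool through a `nodeSelector`. The sketch below builds a Job manifest that does this. The pool names `high-cpu-pool` and `high-mem-pool`, the job name, and the image are hypothetical.

```python
import json

# Label that GKE sets on every node to record its node pool.
NODE_POOL_LABEL = "cloud.google.com/gke-nodepool"

# Hypothetical node pool names, one per machine profile.
POOLS = {"cpu": "high-cpu-pool", "memory": "high-mem-pool"}


def job_manifest(name, image, profile):
    """Build a Job whose pod is scheduled only onto the pool for `profile`."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "spec": {
                    "nodeSelector": {NODE_POOL_LABEL: POOLS[profile]},
                    "containers": [{"name": name, "image": image}],
                    "restartPolicy": "Never",
                }
            }
        },
    }


# A CPU-heavy job goes to the high-CPU pool (placeholder name and image).
print(json.dumps(job_manifest("render", "example/render:1.0", "cpu"), indent=2))
```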

For how long have I used the solution?

I have been using this solution for six to seven months.

What do I think about the stability of the solution?

Google Kubernetes Engine is very stable.

How are customer service and support?

I haven't had any issues. If I face a problem, I just Google it and find the solution.

Which solution did I use previously and why did I switch?

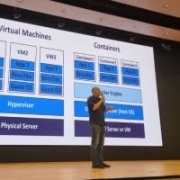

I have previously worked with Docker. I have created and deployed containers using Docker and Docker Hub.

GKE is a managed Kubernetes service that runs on Google Cloud Platform (GCP). It makes it easy to deploy and manage containerized applications on GCP.

How was the initial setup?

You can deploy workloads to GKE using YAML files or the Kubernetes CLI.

The initial setup is very easy.

What about the implementation team?

We can create our cluster using the command line or the Google Cloud console.

First of all, you have to provide the name of your cluster. Then you create your default node pool according to your workload. You also have to specify whether the cluster is private or public, and add other settings such as cluster networking. There is a security section as well. You have to state whether the cluster can be deleted; there's an option to enable deletion protection.

So, with all these configurations set up using the console or command line, you can either click Create or run the command, and your cluster will be deployed on the platform.
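For the command-line route, the steps above (name, default node size, private or public, networking) could be assembled roughly like this. It is only a sketch: the cluster name, zone, machine type, and network are placeholders, the function is my own helper, and a real private cluster may need additional networking flags that are not shown here.

```python
import shlex


def cluster_create_command(name, zone, machine_type, num_nodes,
                           private=False, network=None):
    """Assemble a `gcloud container clusters create` invocation as an argument list."""
    cmd = [
        "gcloud", "container", "clusters", "create", name,
        "--zone", zone,
        "--machine-type", machine_type,
        "--num-nodes", str(num_nodes),
    ]
    if network:
        cmd += ["--network", network]
    if private:
        # Private nodes have no public IPs; they rely on VPC-native (alias IP) networking.
        cmd += ["--enable-ip-alias", "--enable-private-nodes"]
    return cmd


# Placeholder values for illustration only.
command = cluster_create_command(
    "demo-cluster", "us-central1-a", "e2-standard-4", 3,
    private=True, network="default",
)
print(shlex.join(command))
```

Building the command as a list keeps each flag and value separate, so it can be printed for review or handed to `subprocess.run` without shell quoting problems.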

Google Kubernetes Engine requires some maintenance. However, most of the maintenance tasks are handled by Google Cloud. For example, Google Cloud will automatically patch the Kubernetes Engine nodes and apply security updates.

What's my experience with pricing, setup cost, and licensing?

Kubernetes is an open-source project, so there is no licensing cost. However, there are costs associated with running Kubernetes in the cloud, such as the cost of the compute resources and the cost of the managed service (if you are using a managed Kubernetes service like GKE).

Which other solutions did I evaluate?

I have worked with App Engine and Cloud Functions. I recently learned about the Dataflow service, which allows you to process data and move it from one source to another in real-time (streaming) or batch mode. For example, you could use it to count the number of times each word appears in a text. You can save the results of your Dataflow job to a Cloud Storage bucket.

Dataflow is a powerful tool for processing large amounts of data. The results it saves to a Cloud Storage bucket can be text or documents.

When you run a Dataflow job, Dataflow will process the data from your source, such as a Cloud Storage bucket, and store the results in a bucket that you specify. If you have a real-time data processing need, such as tracking the location of a taxi, you can also use Dataflow to create a real-time streaming pipeline.
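To make the word-count example concrete, here is what such a job computes, written in plain Python rather than with the Dataflow SDK. It only illustrates the logic (split lines into words, count them, format the results); the function names and the sample lines are made up, and a real job would read from and write to Cloud Storage instead of using in-memory lists.

```python
import re
from collections import Counter


def count_words(lines):
    """Count how often each word appears across all input lines (case-insensitive)."""
    counts = Counter()
    for line in lines:
        counts.update(re.findall(r"[a-z']+", line.lower()))
    return counts


def format_results(counts):
    """Turn the counts into 'word: n' lines, sorted by word, ready to be written out."""
    return [f"{word}: {n}" for word, n in sorted(counts.items())]


# Made-up sample input standing in for a file in a bucket.
sample = ["the cat and the hat", "The end"]
for row in format_results(count_words(sample)):
    print(row)
```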

What other advice do I have?

Those who want to run their workloads on Kubernetes can do so with this solution. It's automatically scalable, so you don't have to maintain your service by hand. It will be adjusted automatically based on your workload and needs.

The other thing is microservice-style development, which is now a common way to build applications. When we use microservices, they can be easily managed using Kubernetes. It also makes it easy to find an error, so the solution is really helpful.

And with microservices, the whole application won't fail. Only the deployment or nodes that cause an error in the application are affected; that's the only failure. So my advice is to use microservice-style development in your development environment, package each service as a container, and deploy the container workloads in Kubernetes using a Deployment or DaemonSet, and the project will be maintained automatically.

Overall, I would rate the solution a nine out of ten.

*Disclosure: I am a real user, and this review is based on my own experience and opinions.